Solving Master Equations

A master equation

can be simplified into

To figure out the elements of this new matrix \(\mathbf A\), we need to understand how to combine the terms on the RHS of the master equation. The matrix form of the master equation (22) is

Since \(A_{mm} = \sum_n R_{nm}\) is just a real number, we can combine the two matrices on the RHS,

The \(\mathbf A\) matrix is then defined as

The abstract form of the master equation (23) is

To solve it, we need to diagonalize \(\mathbf A\) so that the solution can be written down as

In general, the diagonalization is achieved by using an invertible matrix \(\mathbf S\),

in which \(\mathbf A_{\text{diag}} = \mathbf S^{-1} \mathbf A \mathbf S\) and \(\mathbf P_{\text{diag}} = \mathbf S^{-1} \mathbf P\).
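As a minimal numerical sketch of this diagonalization, assume the sign convention \(\frac{d}{dt}\mathbf P = -\mathbf A \mathbf P\), so that \(\mathbf P(t) = \mathbf S e^{-\mathbf A_{\text{diag}} t}\mathbf S^{-1}\mathbf P(0)\), and take \(R_{mn}\) to be the rate from state \(n\) to state \(m\). The three-state rates below are arbitrary illustrative values, and `build_A` and `solve_master` are hypothetical helper names, not part of any library.

```python
import numpy as np

def build_A(R):
    """A = -R + diag(column sums of R), i.e. A_mm = sum_n R_nm."""
    R = np.asarray(R, dtype=float)
    return -R + np.diag(R.sum(axis=0))

def solve_master(A, P0, t):
    """P(t) = S exp(-A_diag t) S^{-1} P(0), assuming A is diagonalizable."""
    eigvals, S = np.linalg.eig(A)              # columns of S are eigenvectors
    P_diag0 = np.linalg.solve(S, P0)           # S^{-1} P(0)
    P_diag_t = np.exp(-eigvals * t) * P_diag0  # each mode evolves independently
    return (S @ P_diag_t).real

# Illustrative rates (assumed values) with zero diagonal.
R = np.array([[0.0, 1.0, 0.5],
              [2.0, 0.0, 1.0],
              [0.5, 0.3, 0.0]])
A = build_A(R)
P0 = np.array([1.0, 0.0, 0.0])
P_t = solve_master(A, P0, t=0.7)
print(P_t, P_t.sum())
```

Because every column of \(\mathbf A\) built this way sums to zero, the total probability is conserved, which is a quick sanity check on the result.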

Food for Thought

Is there a mechanism that ensures that \(\mathbf A\) is diagonalizable? If \(\mathbf A\) is defective, none of this can be done. Do all physical systems have a diagonalizable \(\mathbf A\)?

Quantum Mechanics

The master equation (24) is similar to the Schrödinger equation in quantum mechanics,

It is also quite similar to the Liouville equation,

Solving Master Equations: Fourier Transform

The Fourier transform is a fast and efficient method of diagonalizing the \(\mathbf A\) matrix when the system has translational symmetry.

We consider a coarse-grained system with translational symmetry. The values of the elements of the \(\mathbf A\) matrix depend only on \(l:= n-m\), i.e.,

For a translationally symmetric system, a discrete Fourier transform is applied to find the normal modes. Define the kth mode as

Multiplying both sides of the master equation by \(e^{ikm}\) and summing over \(m\), we get

With the kth mode defined in (25), the master equation can be written as

which “accidentally” diagonalizes the matrix \(\mathbf A\). Define the kth mode of \(\mathbf A\) as \(A^k = \sum_{l=m-n} e^{ik(m-n)}A_{m-n}\). The master equation

is reduced to

Note

Note that summation over n and m is equivalent to summation over n and m-n.

Finally we have the solution for the normal modes,

To find out the final solution, perform an inverse Fourier transform on the kth mode,

Important

Because of the Born-von Karman boundary condition, we choose

which leads to

A discrete transform becomes an integral when we deal with continuous systems. This is achieved with the following replacement,

This replacement matters because the inverse discrete transform carries the factor \(\frac{1}{N}\sum_k\).
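The statement that the Fourier transform diagonalizes a translationally invariant \(\mathbf A\) can be checked numerically. The sketch below assumes a periodic chain of \(N = 8\) sites, the conventions \(P^k = \sum_m e^{ikm} P_m\) and \(A^k = \sum_l e^{ikl} A_l\) with \(l = m - n\), and illustrative values for \(A_l\); none of these numbers come from the text.

```python
import numpy as np

N = 8
m = np.arange(N)
k = 2 * np.pi * np.arange(N) / N      # allowed wavenumbers on a ring of N sites

# A_mn depends only on l = m - n (mod N); a[l] holds A_l (illustrative values).
a = np.zeros(N)
a[0] = 2.0
a[1] = -1.0
a[-1] = -1.0
A = np.array([[a[(i - j) % N] for j in range(N)] for i in range(N)])

F = np.exp(1j * np.outer(k, m))       # F[k, m] = e^{ikm}
A_k = F @ a                           # A^k = sum_l e^{ikl} A_l

P = np.random.default_rng(0).random(N)
lhs = F @ (A @ P)                     # Fourier transform of sum_n A_mn P_n
rhs = A_k * (F @ P)                   # A^k P^k: one number per mode, no mixing

# The inverse transform carries the 1/N factor.
P_back = (F.conj().T @ (F @ P)).real / N

print(np.allclose(lhs, rhs), np.allclose(P_back, P))
```

In k-space each mode is multiplied by its own \(A^k\), so the coupled equations separate into \(N\) independent ones.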

Finite Chain with Nearest-Neighbor Interactions

Fig. 22 Finite chain with nearest-neighbour interactions

On a 1D finite chain, the transfer rate is

which leads to the following master equation,

Perform a discrete Fourier transform on equation (26),

Collecting terms, we get

The solution for the kth mode is

To retrieve the solution to the original master equation, an inverse Fourier transform is applied,

Applying the Born-von Karman boundary condition, we find that \(k\) is quantized,
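The steps of this section can be sketched numerically. The code assumes the nearest-neighbour master equation \(\frac{d}{dt}P_m = F(P_{m+1} + P_{m-1}) - 2F P_m\) on a periodic chain, for which each mode decays as \(P^k(t) = P^k(0)\,e^{-2F(1-\cos k)t}\) with \(k = 2\pi n/N\). The function name `ring_solution` and the parameter values are illustrative assumptions.

```python
import numpy as np

def ring_solution(P0, F, t):
    """Evolve P on a periodic chain through its Fourier modes."""
    N = len(P0)
    m = np.arange(N)
    k = 2 * np.pi * np.arange(N) / N          # quantized by the periodic BC
    Pk0 = np.exp(1j * np.outer(k, m)) @ P0    # P^k(0) = sum_m e^{ikm} P_m(0)
    Pk_t = Pk0 * np.exp(-2 * F * (1 - np.cos(k)) * t)
    P_t = np.exp(-1j * np.outer(m, k)) @ Pk_t / N   # inverse transform
    return P_t.real

N, F = 6, 1.0
P0 = np.zeros(N)
P0[0] = 1.0                                   # start localized on site 0
P_t = ring_solution(P0, F, t=0.5)
print(P_t, P_t.sum())
```

The \(k = 0\) mode does not decay, which is how conservation of total probability shows up in this representation.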

Matrix Form

The matrix form of the equations is easier to understand. Here we work out the 1D finite chain problem explicitly in matrix form.

First of all, citing the master equation (26), we have

We rewrite it in the matrix form

Matrix Form Makes a Difference

An easy method to get the matrix form is to write down the \(\mathbf R\) matrix, whose diagonal elements are all 0. We construct the \(\mathbf A\) matrix by flipping the sign of every element and then putting on each diagonal entry the sum of the original elements in the corresponding column, \(A_{mm} = \sum_n R_{nm}\). One should pay attention to the signs.

Because matrices add, a complicated matrix can be decomposed into several simple ones.

To solve this equation, we diagonalize the \(6\times 6\) matrix. As with the discrete Fourier transform used in the previous method, we have

We immediately recognize the solution,

Eigenvalue Problem

Note that the elements of the diagonalized \(\mathbf A_{\text{diag}}\) matrix are just the eigenvalues of the \(\mathbf A\) matrix, and the columns of the diagonalizing matrix are the corresponding eigenvectors. Solving the discrete master equation is equivalent to solving the eigenvalue problem of the \(\mathbf A\) matrix.
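The recipe for building \(\mathbf A\) from \(\mathbf R\) and the eigenvalue statement can be checked with a short sketch. It assumes a periodic six-site chain with a single nearest-neighbour rate \(F = 1\) (an illustrative value), and compares the eigenvalues of \(\mathbf A\) with the Fourier-mode result \(2F(1-\cos k)\), \(k = 2\pi n/6\).

```python
import numpy as np

N, F = 6, 1.0

# R has zeros on the diagonal and F between nearest neighbours (periodic).
R = np.zeros((N, N))
for m in range(N):
    R[m, (m + 1) % N] = F
    R[m, (m - 1) % N] = F

# Flip the sign of every element, then put the column sums on the diagonal.
A = -R + np.diag(R.sum(axis=0))

eigvals = np.linalg.eigvalsh(A)                  # A is symmetric; ascending order
k = 2 * np.pi * np.arange(N) / N
expected = np.sort(2 * F * (1 - np.cos(k)))

# A plane wave is an eigenvector, with eigenvalue 2F(1 - cos k).
v = np.exp(-1j * k[1] * np.arange(N))
lam = 2 * F * (1 - np.cos(k[1]))

print(eigvals)
print(np.allclose(eigvals, expected), np.allclose(A @ v, lam * v))
```

The zero eigenvalue belongs to the uniform distribution, which is the stationary state of this chain.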

Infinite Chain with Nearest-Neighbor Interactions

For an infinite chain, we have exactly the same master equation. The difference lies in the boundary conditions. For an infinite chain, \(N\rightarrow \infty\) and

Validate the Relation

One way to validate this relation is to check the sum. Applying both operators to unity, both sides should give 1,

The solutions are

or

where \(I_m(2Ft)e^{-2Ft}\) is the propagator.
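As a numerical sketch of the propagator, we can evaluate \(I_m(2Ft)e^{-2Ft}\) for integer \(m\) from the integral representation \(\frac{1}{2\pi}\int_{-\pi}^{\pi} e^{-2Ft(1-\cos k)}\cos(km)\,dk\), which is the \(N\rightarrow\infty\) form of the inverse Fourier transform for an initial condition localized at \(m = 0\). The function name `propagator`, the grid size, and the parameter values are assumptions made for illustration.

```python
import numpy as np

def propagator(m, F, t, n_grid=4096):
    """I_m(2Ft) e^{-2Ft} as (1/2pi) times the integral over k in [-pi, pi)."""
    k = -np.pi + 2 * np.pi * np.arange(n_grid) / n_grid
    integrand = np.exp(-2 * F * t * (1 - np.cos(k))) * np.cos(k * m)
    # For a periodic integrand, the mean over a uniform grid
    # approximates (1/2pi) times the integral over one period.
    return integrand.mean()

F, t = 1.0, 0.5
sites = np.arange(-30, 31)
P = np.array([propagator(m, F, t) for m in sites])

print(P.sum())                                   # total probability
print(propagator(0, F, t), np.i0(2 * F * t) * np.exp(-2 * F * t))
```

The last line compares the \(m = 0\) value with NumPy's built-in zeroth-order modified Bessel function `np.i0`.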

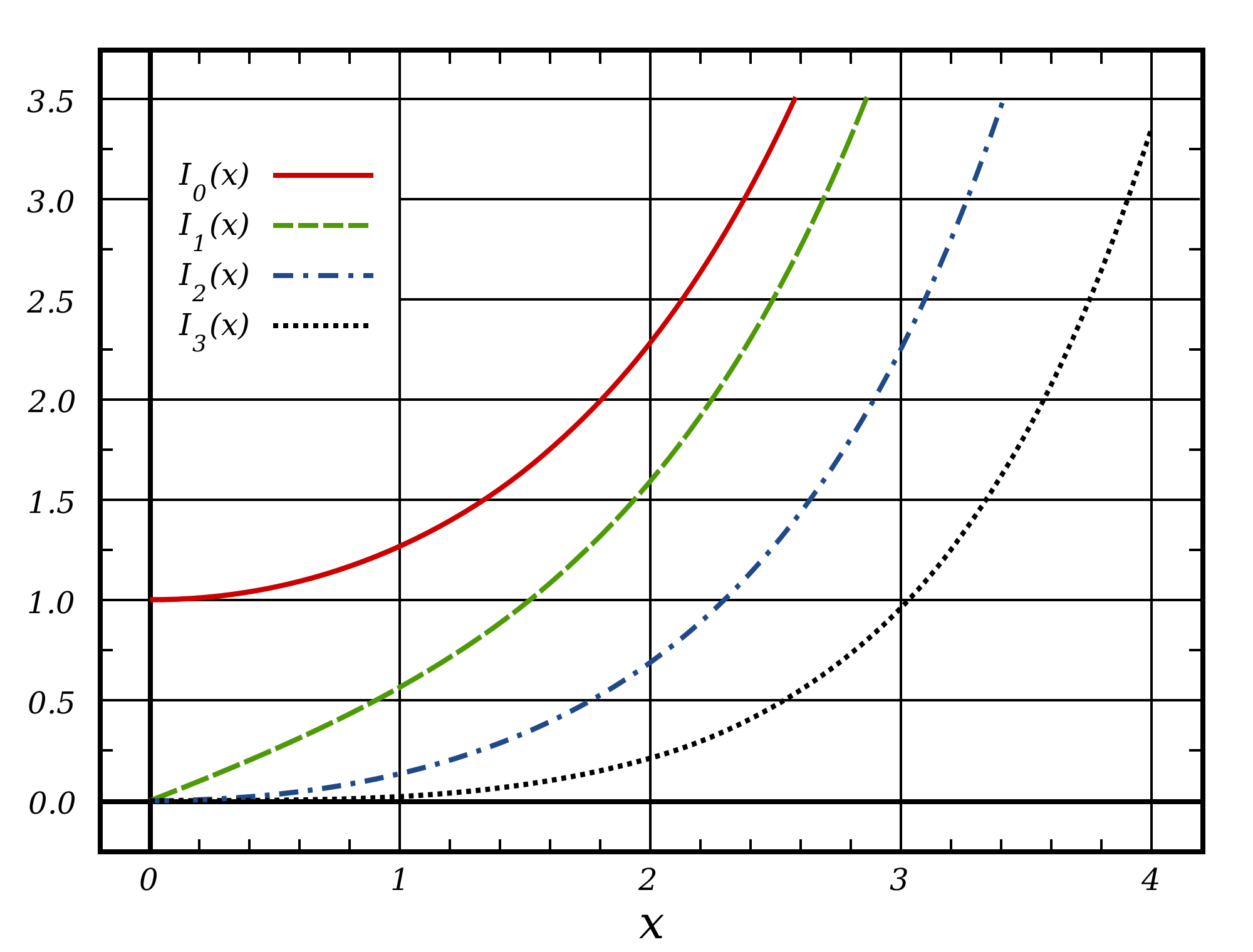

Modified Bessel Function

Vocabulary: The modified Bessel function is defined as

Since it is the ordinary Bessel function evaluated at a purely imaginary argument, it is also called the Bessel function of imaginary argument.

Fig. 23 Source: BesselI_Functions_(1st_Kind) @ Wikipedia

2D Lattice

A 2D lattice is shown in Fig. 24.

Fig. 24 An equilateral triangle lattice. Source: Equilateral Triangle Lattice by Jim Belk @ Wikipedia

We require it to have translational symmetry in both the x and y directions. The solution is then the product of the solutions in the x and y directions,
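The product form can be checked numerically in the simplest setting. The sketch below assumes a periodic square lattice (not the triangular lattice of the figure) with the same nearest-neighbour rate \(F\) in both directions, and verifies that the outer product of two 1D solutions satisfies the 2D master equation. The helper names and parameter values are illustrative.

```python
import numpy as np

def chain_solution(P0, F, t):
    """1D periodic nearest-neighbour chain, solved by diagonalizing A."""
    N = len(P0)
    eye = np.eye(N)
    R = F * (np.roll(eye, 1, axis=0) + np.roll(eye, -1, axis=0))
    A = -R + np.diag(R.sum(axis=0))
    w, S = np.linalg.eigh(A)                  # A is symmetric here
    return S @ (np.exp(-w * t) * (S.T @ P0))

def neighbour_sum_2d(P):
    """Sum over the four nearest neighbours minus 4 P (periodic lattice)."""
    return (np.roll(P, 1, 0) + np.roll(P, -1, 0)
            + np.roll(P, 1, 1) + np.roll(P, -1, 1) - 4 * P)

def product_solution(P0, F, t):
    """P_{mn}(t) as the product of the x and y solutions."""
    p = chain_solution(P0, F, t)
    return np.outer(p, p)

N, F, t, h = 8, 1.0, 0.3, 1e-5
x0 = np.zeros(N)
x0[0] = 1.0

# Central-difference time derivative versus the right-hand side.
dPdt = (product_solution(x0, F, t + h) - product_solution(x0, F, t - h)) / (2 * h)
residual = np.abs(dPdt - F * neighbour_sum_2d(product_solution(x0, F, t))).max()
print(residual)
```

A residual near the finite-difference error indicates that the product of the two 1D solutions solves the 2D equation under these assumptions.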

Continuum Limit

For a continuum system,

The right-hand side has the form of a finite-difference derivative, but if \(F\) is a constant it vanishes in the limit \(\epsilon \rightarrow 0\).

On the other hand, \(F\) should increase as the two sites get closer. To resolve the vanishing right-hand side, we assume that

The continuum limit of our master equation becomes the diffusion equation,
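A small numerical experiment illustrates why the rate has to grow as the sites approach each other. The sketch reads \(\epsilon\) as the lattice spacing, takes the nearest-neighbour right-hand side \(F\,[P(x+\epsilon) + P(x-\epsilon) - 2P(x)]\), and compares a constant \(F\) with the scaling \(F = D/\epsilon^2\). The symbol \(D\), its value, and the Gaussian test profile are assumptions made for illustration.

```python
import math

D = 0.5   # assumed constant; plays the role of a diffusion constant

def P(x):
    """Smooth test profile (illustrative)."""
    return math.exp(-x**2)

def d2P(x):
    """Exact second derivative of the test profile."""
    return (4 * x**2 - 2) * math.exp(-x**2)

def discrete_rhs(x, eps, F):
    """Nearest-neighbour right-hand side with lattice spacing eps."""
    return F * (P(x + eps) + P(x - eps) - 2 * P(x))

x = 0.3
for eps in (0.1, 0.01, 0.001):
    constant = discrete_rhs(x, eps, F=1.0)
    scaled = discrete_rhs(x, eps, F=D / eps**2)
    print(f"eps={eps:g}  constant F: {constant:.3e}  "
          f"F=D/eps^2: {scaled:.6f}  D*P'': {D * d2P(x):.6f}")
```

With constant \(F\) the right-hand side shrinks like \(\epsilon^2\), while with \(F = D/\epsilon^2\) it approaches \(D\,\partial_x^2 P\), the right-hand side of a diffusion equation.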